UH Seidman Cancer Center and Case Western Reserve University Collaborate on AI Tool to Predict Treatment Response

February 08, 2021

Tool uses outcomes data from more than 700 UH Seidman oropharyngeal cancer patients

Innovations in Cancer | Winter 2021

Which cancer patients are most likely to respond well to costly drugs? It’s a weighty question that often doesn’t have a clear answer, due to limitations in current tools.

Ted Teknos, MD

Anant Madabhushi, PhD

Take the case of oropharyngeal cancer, says Ted Teknos, MD, President and Scientific Director of UH Seidman Cancer Center and Professor of Otolaryngology and Head & Neck Surgery at Case Western Reserve University School of Medicine.

“There's microsatellite instability staining and also PD-L1 staining,” he says. “Those are the standard markers that we use. High microsatellite instability or a very high percentage of staining with PD-L1 predicts a positive response to immunotherapy compounds. But very few tumors kind of reach that level. And many tumors respond even when they don't have a high amount of staining. So it's not a very reliable way to determine which patients should receive immune checkpoint inhibitors.”

To address this problem, Dr. Teknos is working with Anant Madabhushi, PhD, Professor of Biomedical Engineering at Case Western Reserve University and head of the university’s Center for Computational Imaging and Personalized Diagnostics, to develop an artificial intelligence tool to predict outcome and response to therapy for oropharyngeal tumors.

The tool in development relies on both pathology and radiology images, Dr. Teknos says. Importantly, it uses images produced with straightforward staining methods, as opposed to immunohistochemistry, which reduces costs. These routinely acquired pathology and radiology images then undergo sophisticated computer analysis, which can detect clinically important markers within the images that are beyond the capability of the human eye.

“Our focus has been on using these tools to really integrate patterns that are subtle or sub-visual, that the human eye may not be able to prise out or appreciate,” Dr. Madabhushi says. “The machine is able to go in and prise out these more subtle patterns, quantitative descriptors relating to disease diagnosis, disease prognosis and treatment response.”

As more data and more images are added to the tool, the computer adjusts its predictions accordingly.

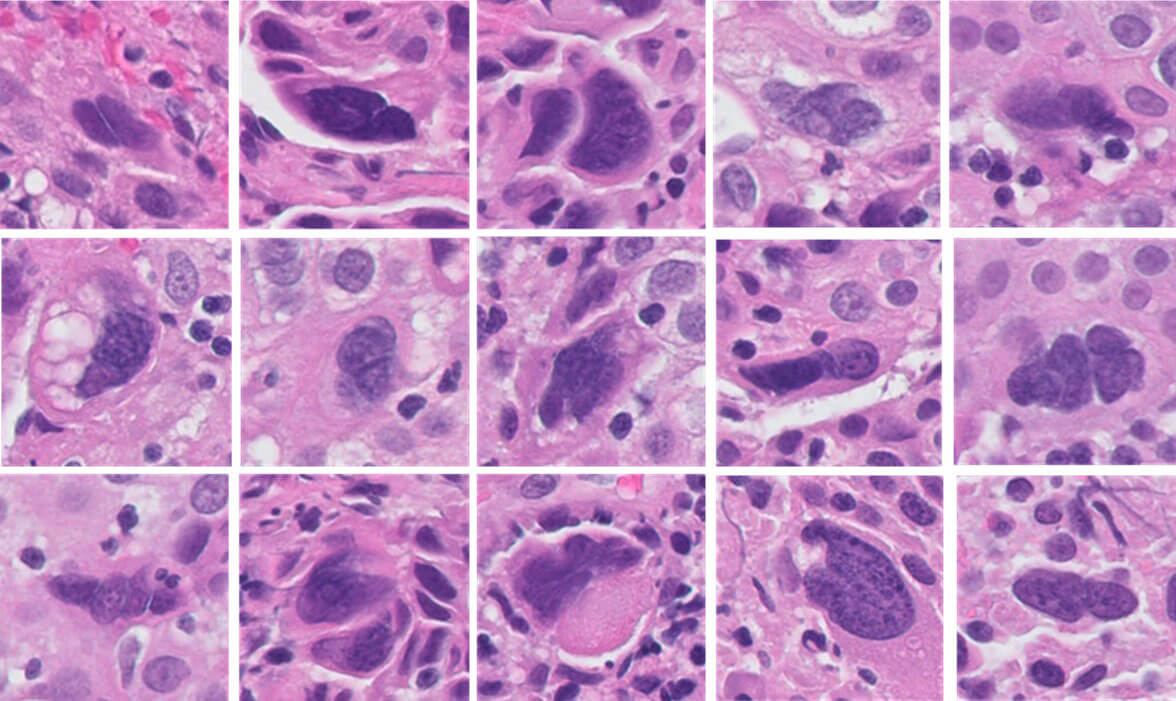

Caption: Computer-Aided Image Analysis Quantitation of Tumor Cell Multinucleation in p16 Positive Oropharyngeal Squamous Cell Carcinoma

“The computer looks for those features that indicate that someone is likely to respond to immunotherapy,” Dr. Teknos says. “Then as new features are added, the algorithm learns, so it becomes more and more specific over time. The hope is that we'll be able to predict much more accurately than our current standards which patients should get these checkpoint inhibitors and which would benefit from other types of treatment.”

Importantly, the AI system under development uses not only pathology and radiology images of the oropharyngeal tumor itself, but also images of the area around the tumor.

“In the pathology, the computer can tell right away the pattern of immune cells in and around the tumor,” Dr. Teknos says. “When the immune cells are in certain places, you're more likely to respond to the tumor than if they're in different places, or if they're in very small nests as opposed to big clumps of cancer. It’s the same in the radiology, in terms of edges of the tumor and also the blood flow in and around the tumor to determine responses to therapy. The amazing part of it is the computing power can take all these pieces of information and just instantly make these determinations, as opposed to us mere humans.”

The initial phase of building the oropharyngeal cancer AI tool is complete. Dr. Teknos and Dr. Madabhushi have input pathology and radiology images from 350 oral cancer patients from UH Seidman Cancer Center and an additional 350 tonsil cancer patients. These patients have known outcomes, but those outcomes have been withheld from Dr. Madabhushi’s AI team.

“They run the analysis and create the model,” Dr. Teknos says. “That’s how they test it and validate it. The next step after these testing and validation steps is to test it prospectively.”

As part of a larger, multi-institutional grant from the National Cancer Institute, Dr. Teknos and Dr. Madabhushi are collaborating to validate this approach in oropharyngeal cancers from multiple sites across two continents.

“We want to see in patients who are just about to start treatment, through the biopsies, just how predictive it is in that actual patient population,” Dr. Teknos says.

As this tool moves toward clinical use, Dr. Teknos says he hopes others like it will be part of a trend toward enhanced focus on objective data in medicine.

“Right now, medicine is still very much an art,” he says. “You read the data and you interpret it and you give your opinions as an individual practitioner. But having this kind of objective data that has lots of inputs into it is really the gold standard. Or at least will be the gold standard.”